Devlog 3 - Projection

A more focused devlog today, a fun, weird technical one, where artistic overhauls require large technical overhauls too, and we contemplate the roles of game engines (and external tools generally) in our workflows.

On Screen, At the Screen

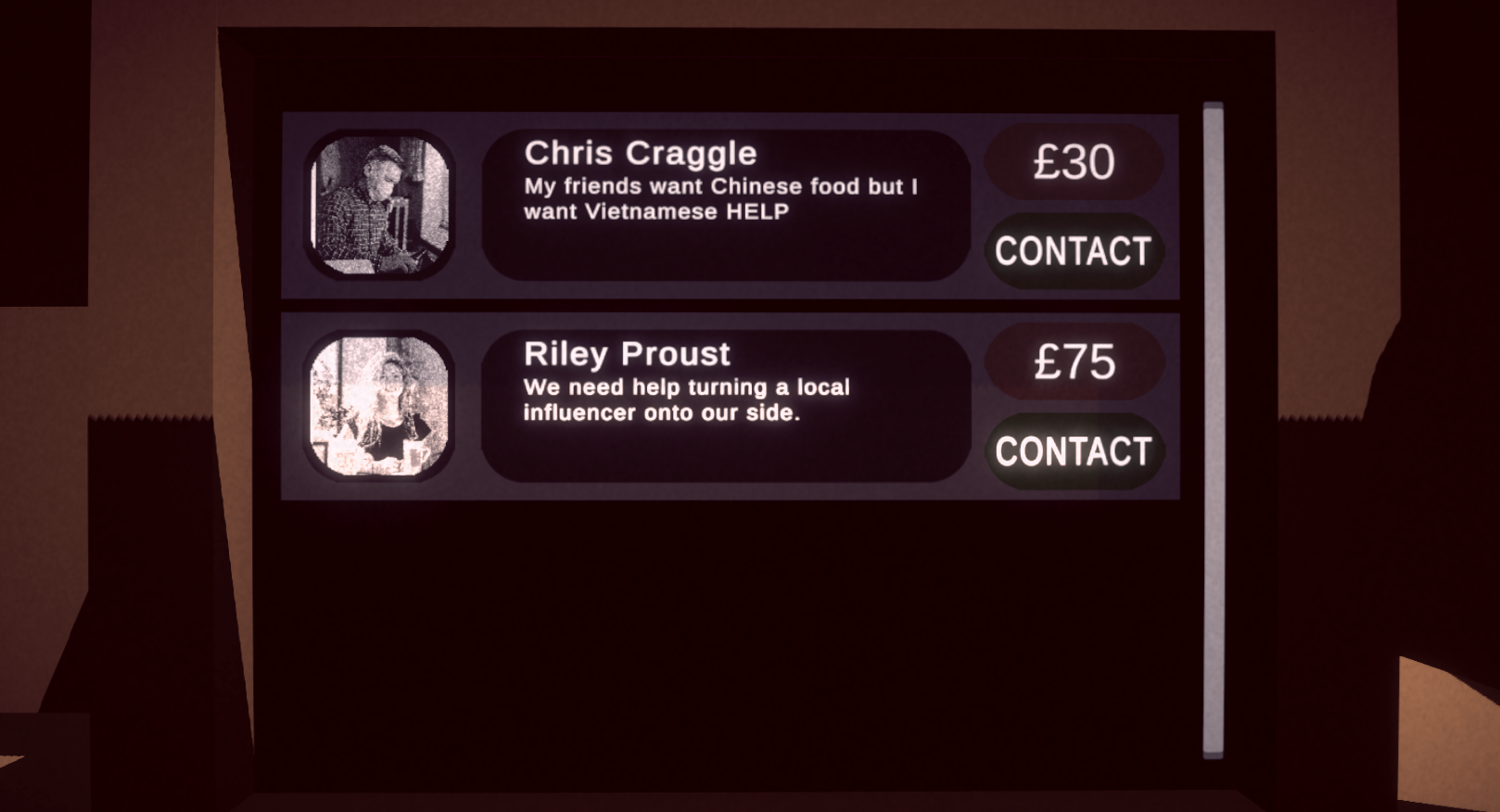

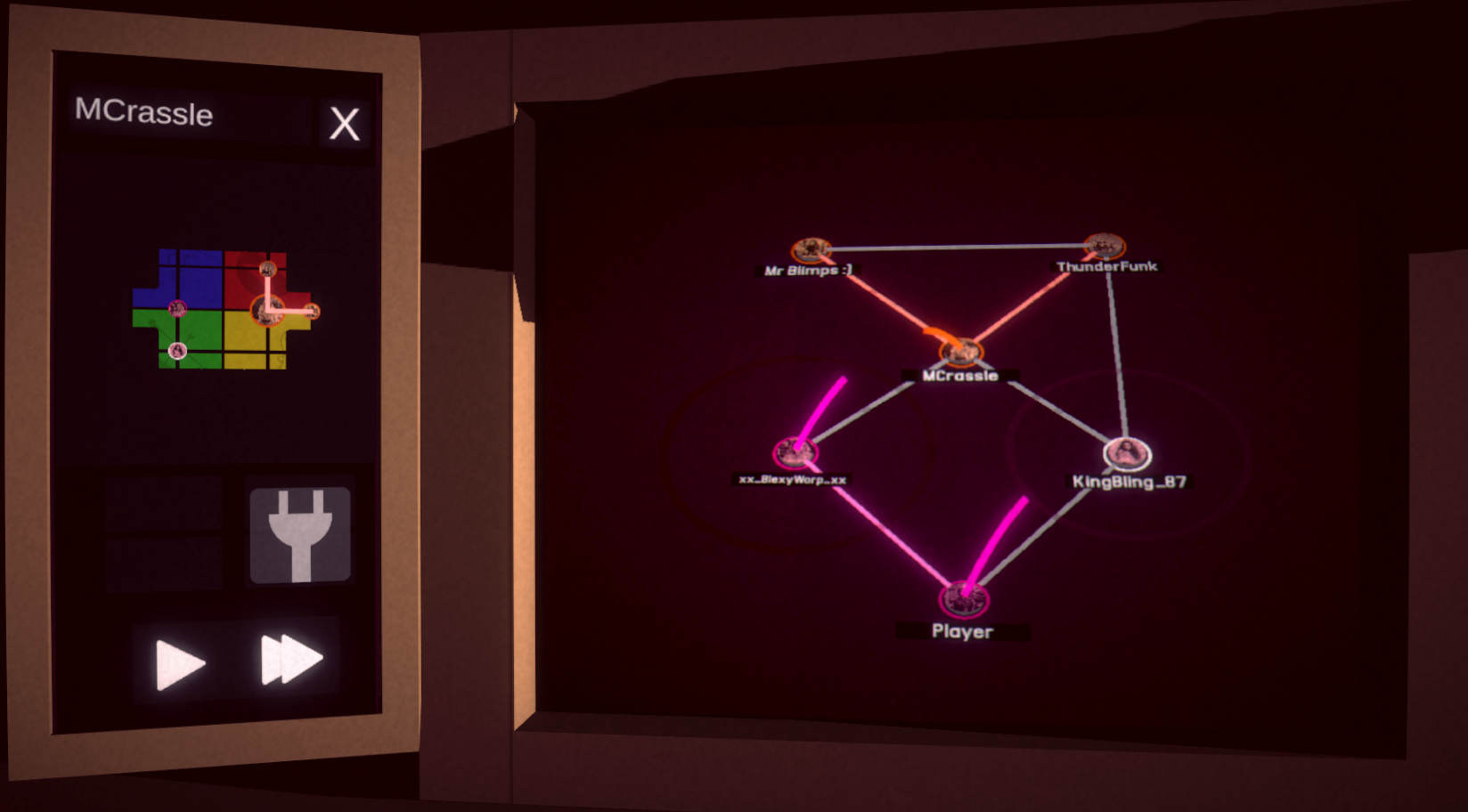

As I teased in the last blog post, I’ve undertaken a pretty major visual / interactional overhaul for Inoculate, putting the entire previous game view on a set of in-game “Computer Screens”. This was something I’ve wanted to do for a while, and for a few reasons: For one, there are significantly more options for the kinds of content I can display, while still ensuring the entire experience feels coherent. It also gives the game a more holistic visual identity than a purely abstract view could provide. Finally, it gives some of that sense of “contextualization” that I talked a lot about in the last blog post, grounding the game’s abstract mechanics in an at least partially real world.

It’s weird to start a Devlog with the final result of my labour, only to then work backwards through how I achieved it. Yet, it's also accurate to the experience of making this all work: I knew exactly what I wanted, underwent a process to achieve it, and then achieved essentially exactly what I wanted. But that process of getting there was itself interesting and notable, and so still worth analyzing imo!

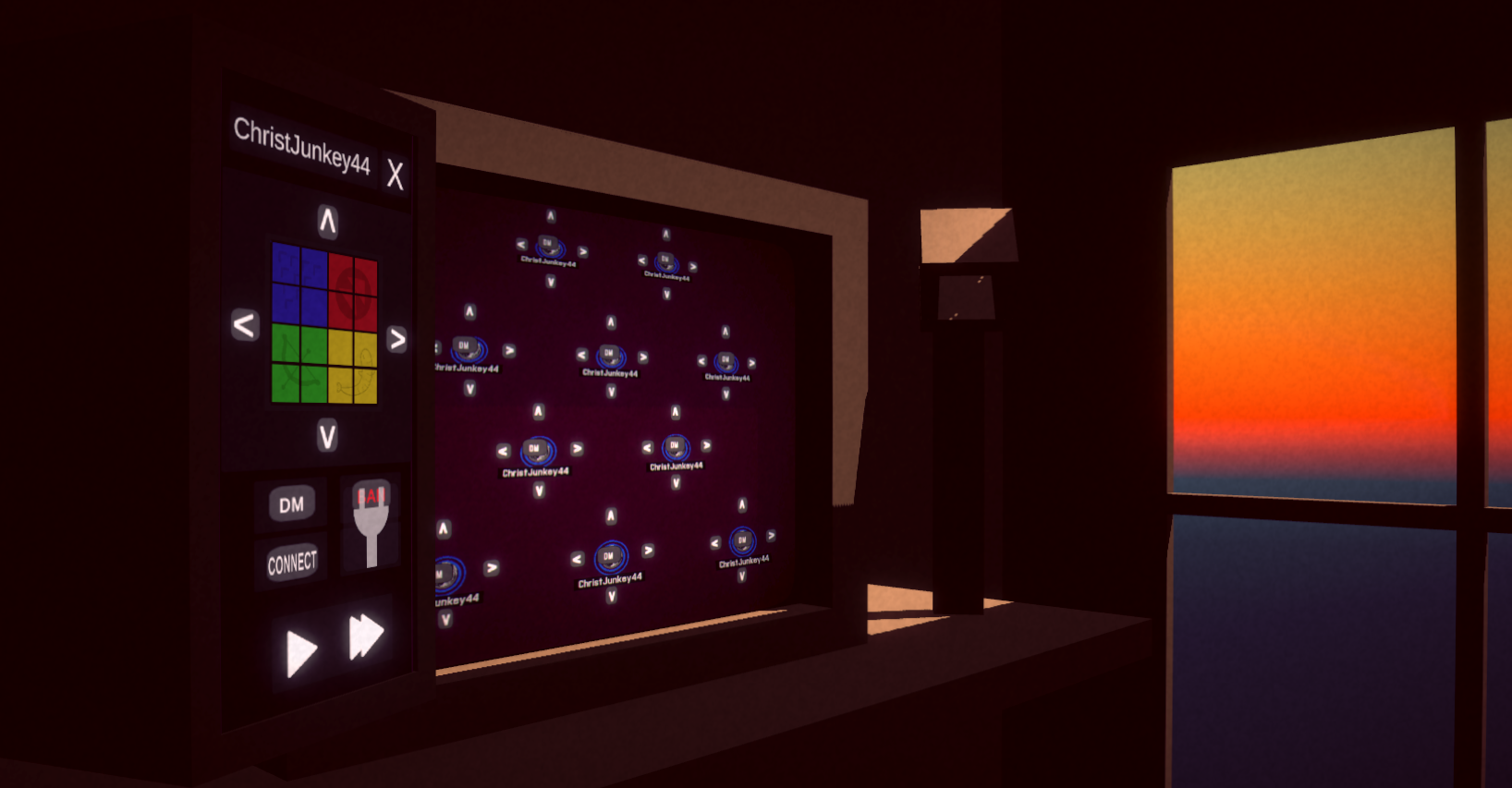

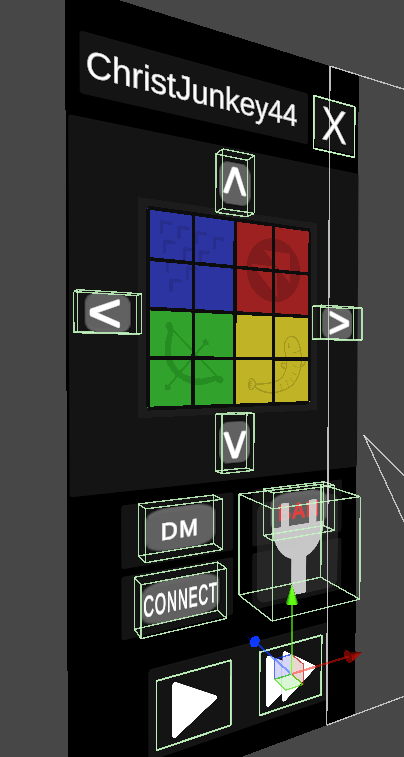

To start with, I built the current visual set-up, which obviously is still just the barest of greyboxes. Getting the game to actually render to these screens was relatively simple. After splitting out what I’m terming the “Map View” (i.e. the one with all the Nodes) and the “Axes View” (the one with specific Node info), I essentially just pointed cameras at them, rendered those cameras to a render texture, slapped the render texture on a material, slapped that material on a plane, slapped that plane on the front of the computer and that was it! Easy!

For what it’s worth, there are probably some of you wondering why I didn’t just slot at least the Axes UI elements directly onto the screen, instead of doing this Camera-pointed-at-UI approach. I have my reasons (though we’ll be talking about some of the problems it caused later), and my answer is two-fold. For one, this approach helps unite the interaction system across screens. For another, it has the added benefit of making it incredibly easy to swap out what a screen is displaying at any given time, just by adjusting what's being rendered to the render texture. That said, it'll all also definitely be getting tweaked over time: Stuff like swapping the UI buttons for “physical” buttons or having certain pieces of information be displayed via analogue means, all to give this machine the player now uses a more… machine-y feel. As I said earlier, having a variety of options to display different kinds of content is really what this whole aesthetic is for, and while that will definitely be refined (and made more performant!), these two visual / mechanical options for UI / UX are going to be a really fun thing to play with.

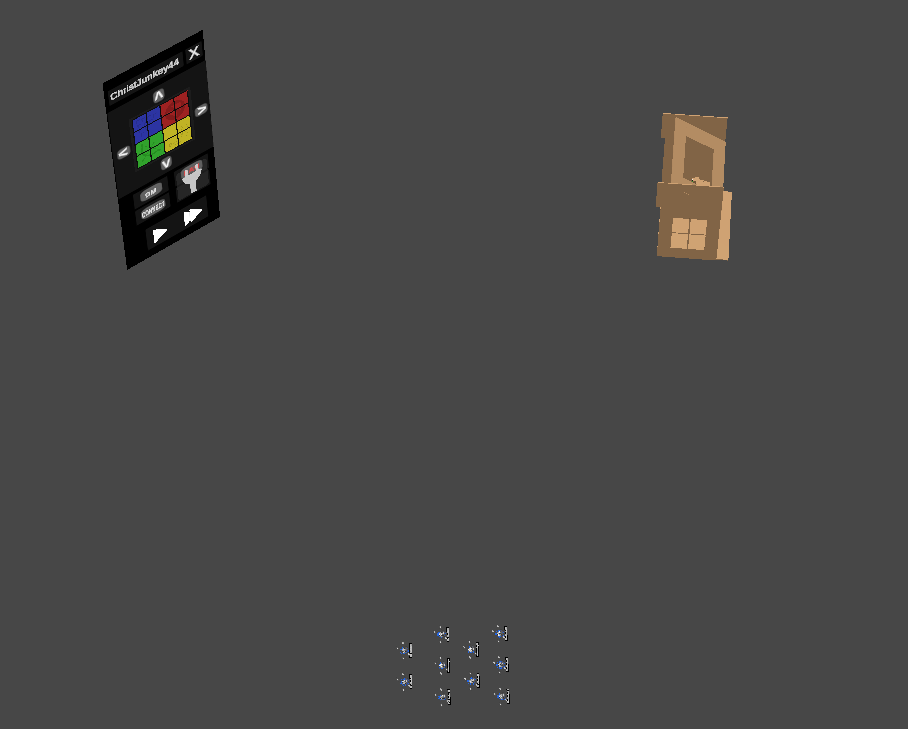

As a fun extra, here are some of the game elements from the scene view. You can see the room is specifically angled / positioned to not be looking at the HUD / Map view. A very goofy solution to a problem that's VERY easily solvable in a number of other ways.

Interfacing

Of course, the easy part is getting a camera to render a pre-existing thing; the harder thing is to get interactions with that previous thing to work again. Essentially, the way interactions worked before was simple: The player hovers over / clicks something with their mouse, if it’s a Unity UI button we just trigger that interaction, and if not we shoot a raycast out based on the mouse’s position, see if it hits an interactable, and then trigger whatever interaction that’s designed to trigger. The problem now is that, in order to get some of those same functions working but with content "inside" a screen, we have to do multiple checks and translations:

- What game screen is the player trying to interact with

- Where on the screen itself are they hovering over / clicking

- What position does that correspond to "inside" of said screen

- Determine if that internal position corresponds to any interactable

- If so, trigger the interaction

To begin with, because I knew we’d have multiple screens which needed essentially identical functions, I created a generic class that would help with all of the above steps. This sits on any of the planes we made previously that display all of the content, and is fed a reference to the camera whose contents it is displaying. With that, I made it so there is always a raycast shooting out of the mouse’s location, and if said raycast hits a screen, we know the player is attempting to interact with the contents of it. This solves step 1.
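For the curious, the "did the mouse ray hit a screen" check boils down to a ray vs. rectangle test. Here's a minimal sketch in plain Python rather than Unity C#. Every name in it (`ray_hits_screen`, the vector helpers, the parameters) is made up for illustration, and in the engine itself a physics raycast would presumably do this work instead.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]

def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def ray_hits_screen(origin: Vec3, direction: Vec3,
                    center: Vec3, normal: Vec3,
                    right: Vec3, up: Vec3,
                    half_w: float, half_h: float) -> Optional[Vec3]:
    """Return the point where the ray hits the screen rectangle, or None.

    Assumes `normal`, `right` and `up` are unit vectors describing the
    screen plane (all hypothetical inputs for this sketch).
    """
    denom = dot(direction, normal)
    if abs(denom) < 1e-9:
        return None  # ray is parallel to the screen plane
    t = dot(sub(center, origin), normal) / denom
    if t < 0:
        return None  # the plane is behind the ray origin
    hit = (origin[0] + t * direction[0],
           origin[1] + t * direction[1],
           origin[2] + t * direction[2])
    local = sub(hit, center)
    # Reject hits on the plane that fall outside the screen's rectangle.
    if abs(dot(local, right)) > half_w or abs(dot(local, up)) > half_h:
        return None
    return hit

# A screen at the world origin facing -Z, with the ray shot straight at it.
screen = dict(center=(0.0, 0.0, 0.0), normal=(0.0, 0.0, -1.0),
              right=(1.0, 0.0, 0.0), up=(0.0, 1.0, 0.0),
              half_w=1.0, half_h=0.5)
print(ray_hits_screen((0.0, 0.0, -5.0), (0.0, 0.0, 1.0), **screen))
print(ray_hits_screen((3.0, 0.0, -5.0), (0.0, 0.0, 1.0), **screen))  # misses
```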

From there, step 2 is relatively easy: Unity has an internal concept called “Bounds”, which returns the dimensions of a given object. With that, we can use the origin point of the screen object to convert the point on the screen that our initial raycast is hitting to a purely relative position (0,0 being the center, 1,1 being the top right corner, etc).
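As a rough illustration of that conversion, here's a small sketch in plain Python (not Unity C#). `ScreenRect` and `to_relative` are hypothetical names, and the screen is simplified to a flat 2D rectangle described by a center and half-sizes, similar in spirit to a bounds' center and extents.

```python
from dataclasses import dataclass
from typing import Tuple

@dataclass
class ScreenRect:
    """Hypothetical stand-in for a flat screen, measured in its own plane."""
    center: Tuple[float, float]   # the screen's origin point
    extents: Tuple[float, float]  # half-width and half-height

def to_relative(screen: ScreenRect, hit: Tuple[float, float]) -> Tuple[float, float]:
    """Map a hit point to relative coordinates.

    (0, 0) is the center of the screen and (1, 1) is the top right corner,
    so each axis runs from -1 to 1 across the screen.
    """
    rx = (hit[0] - screen.center[0]) / screen.extents[0]
    ry = (hit[1] - screen.center[1]) / screen.extents[1]
    return (rx, ry)

screen = ScreenRect(center=(2.0, 1.0), extents=(0.5, 0.25))
print(to_relative(screen, (2.0, 1.0)))    # the center of the screen
print(to_relative(screen, (2.5, 1.25)))   # the top right corner
print(to_relative(screen, (1.5, 0.75)))   # the bottom left corner
```

Dividing by the half-size (rather than the full size) is what makes the corners land on ±1 with the center at 0.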

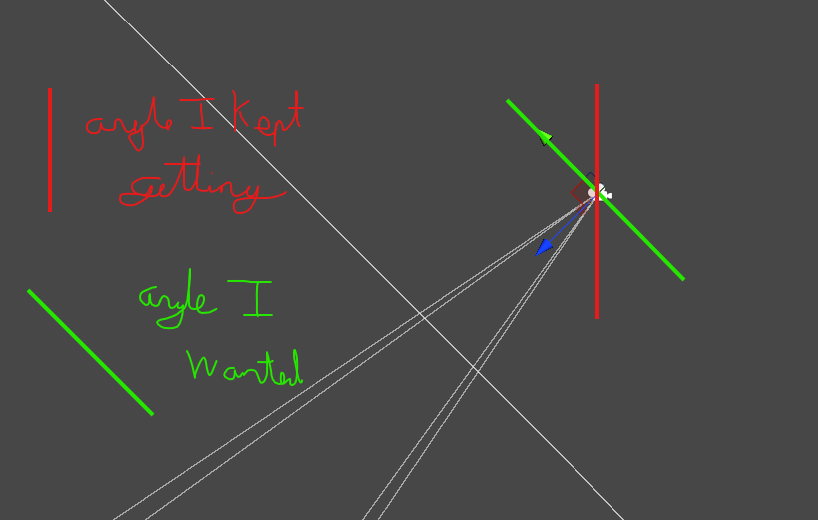

Positioning

We’re now onto step 3, converting that relative screen position to an actual world space position, relative to the internal camera, and this is where I ran into a rather embarrassing problem related to Vector math. Essentially, the Map View camera is at a slight downwards angle, which means any sort of relative "vertical" position that we try to input needs to be "tilted" to match the angle of the map view camera (i.e. also moving forward in world space). The thing is that the math required to pull this off, while not particularly complicated, was just beyond my ability to implement at the time. Embarrassing! Even as I think about it now, I think about how not complicated it is, yet the act of trying to do it by hand just swallowed a massive amount of time.

At a certain point, I just got tired of trying to figure out a proper solution to the problem. Instead, I opted for some classic Unity bullshit. I already wanted a cursor to appear when the mouse moved over a screen, if just for the purposes of testing this output position. And, well, that cursor needed to be tilted to match the angle of the camera to appear correctly… which was easiest to achieve by having it be a child of the camera… and that meant that its local position was, essentially, angled (i.e. moving it "up" in local space would move it a bit "forward" in world space, at the desired rate). This meant I could use this cursor to kill two birds with one stone: I’d move the cursor’s local position by the relative input Vector2, which would automatically move it at the desired angle and put it at the correct position. With that, I just used the cursor’s position as the origin of a raycast to check for interactables. This means that even in the current build, where I have turned the cursor invisible, the object itself is still there, still being moved around, as a way to shortcut me past some math I was struggling with.
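For reference, the math that the parenting trick sidesteps can be sketched roughly like this. It's plain Python rather than Unity C#, and it leans on some loud assumptions: the camera is only pitched downward (no yaw or roll), the coordinate system is Y-up and Z-forward like Unity's, and the relative input has already been scaled into world units. `local_offset_to_world` is a hypothetical name, not anything from the actual project.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]

def local_offset_to_world(camera_pos: Vec3, pitch_down_deg: float,
                          offset_xy: Tuple[float, float]) -> Vec3:
    """Move by (x, y) along the camera's tilted right/up axes.

    Assumption: the camera is rotated only around its X axis, looking
    downward by `pitch_down_deg`. Its local "up" then leans forward in
    world space, so moving "up" locally also moves forward.
    """
    theta = math.radians(pitch_down_deg)
    right = (1.0, 0.0, 0.0)                       # unchanged by a pure pitch
    up = (0.0, math.cos(theta), math.sin(theta))  # tilted toward +Z (forward)
    x, y = offset_xy
    return (camera_pos[0] + x * right[0] + y * up[0],
            camera_pos[1] + x * right[1] + y * up[1],
            camera_pos[2] + x * right[2] + y * up[2])

# With no tilt, "up" is straight up; with a 30 degree tilt, it also moves forward.
print(local_offset_to_world((0.0, 10.0, 0.0), 0.0, (1.0, 2.0)))
print(local_offset_to_world((0.0, 10.0, 0.0), 30.0, (0.0, 2.0)))
```

Making the cursor a child of the camera gets the engine to apply this same kind of local-to-world transform automatically, including any yaw or roll that this sketch ignores.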

This solved step 3, and step 4 / 5 should have been relatively easy from that point, right? Use the same systems I had previously for checking / triggering interactables, just now utilizing the position / raycast I was shooting from the Map / Axes view cameras. Well, only kinda. For the interactables that had already been working via raycasts hitting colliders, this basically worked without issue. But. There were also the interactables that worked via the Unity UI system. I assumed getting these to trigger via a raycast would be pretty easy, given I had utilized this functionality when using Unity’s VR package in the past. But, for this project, which is not a VR game, and one where I didn’t want to install said package and fake it being a VR game just for this simple interaction… well the classic Unity bullshit swung backwards in my direction.

There were some semi-complicated ways to try and essentially force this functionality, but to be honest? I didn’t care for it. Doing a bunch of work just to pipe back into some in-built Unity functions, some of which I have other problems with, just didn’t seem worth it. So I decided to build my own button class. I tied it to a Serializable event, which is triggered if the player attempts to interact with it. This can also be further customized to reference functions on game objects from other scenes if required (Manager classes being the main example). I even store the mouse’s prior raycast information so that I can check if the current raycast has just started or stopped hovering over a button, allowing for hover based features. All this to say, it’s annoying to have to recreate features you’ve already implemented, but it's also hard to deny the tangible benefits of implementing your own solution.
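To give a feel for the shape of that kind of button, here's a small sketch in plain Python rather than Unity C#. It isn't the actual class from the project: `ScreenButton`, `HoverTracker` and their callback lists are hypothetical stand-ins, with plain Python callables playing the role of the serializable event.

```python
from typing import Callable, List, Optional

class ScreenButton:
    """Hypothetical button that fires callbacks instead of using engine UI."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.on_click: List[Callable[[], None]] = []
        self.on_hover_enter: List[Callable[[], None]] = []
        self.on_hover_exit: List[Callable[[], None]] = []

def fire(listeners: List[Callable[[], None]]) -> None:
    for listener in listeners:
        listener()

class HoverTracker:
    """Remembers the previous raycast result to detect hover start / stop."""

    def __init__(self) -> None:
        self.previous: Optional[ScreenButton] = None

    def update(self, current: Optional[ScreenButton], clicked: bool) -> None:
        # `current` is whatever button this frame's raycast hit (or None).
        if current is not self.previous:
            if self.previous is not None:
                fire(self.previous.on_hover_exit)
            if current is not None:
                fire(current.on_hover_enter)
            self.previous = current
        if clicked and current is not None:
            fire(current.on_click)

log: List[str] = []
button = ScreenButton("zoom_in")
button.on_hover_enter.append(lambda: log.append("enter"))
button.on_hover_exit.append(lambda: log.append("exit"))
button.on_click.append(lambda: log.append("click"))

tracker = HoverTracker()
tracker.update(button, clicked=False)  # raycast starts hitting the button
tracker.update(button, clicked=True)   # still hovering, and the player clicks
tracker.update(None, clicked=False)    # raycast leaves the button
print(log)
```

Comparing this frame's hit against the stored previous hit is what turns a stream of raycast results into discrete "started hovering" and "stopped hovering" moments.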

One Man’s Tools

I want to stop and consider the previous section, as I find it represents one of the weirdest parts of working within a commercial game engine, or really any external toolset.

In the first instance, trying to translate an on-screen position to a camera-based position, I found myself "saved" by an external toolset. I was struggling with some relatively basic math, and then I realized that it was math Unity does all the time without issue. And thus, via a slightly jank object parenting operation, I was able to hand off the need to figure out that math, and move on with my day.

In the second instance, I had a relatively simple procedure I wanted done, interacting with UI via a raycast, but due to internal, arcane reasoning, Unity just refused to allow this to happen. I could have utilized one of many awkward, cumbersome work-arounds to try and get this all working again. Or, I could do what I ended up doing: Code it all myself, saving myself the trouble of managing an inherently flawed system and giving myself the benefit of full and complete control of how it works.

This is the thing about external tools: we often don’t really know how they do the things that we ask them to do, and moreover, that is often the point. I very much enjoy that there’s a lot of stuff Unity can do without requiring much thought from me, from the complexities of physics interactions to the basics of rendering the game at all. But by this same process, I also deny myself the ability to learn how to program these things myself. I could, of course, simply program an entire game engine by myself. Am I advocating for this? Not really? The reality is that not only is that really outside of my desired skillset, but it would also just massively slow me down in a way that my goals simply don’t necessitate nor allow for.

But this is all also important to reckon with because of the amount of time I spent trying to get this external toolset to do things for me, hours I lost specifically because I wholeheartedly believed that the toolset should manage those things for me. The time I spent trying to make Unity solve my problems for me was, perhaps unsurprisingly, far longer than the amount of time it took to simply write my own versions of the scripts that functioned exactly how I wanted them to.

This is really the epic highs and lows of external toolsets: The highs of it simply doing stuff for you, or learning how to utilize and manipulate the tools to get it to do even funkier stuff for you; The lows of the stuff you don't learn how to do, and the struggles that stem from fighting the toolset to do those things for you. Another way to frame this is, in essence, tools mastery and tools reliance, the former about your ability to know how and when to use the tools, and the latter being about your inability to not use the tools and/or to prioritize their use above all else. And I think it is hard to fully separate one from the other.

I contemplate this as I think about how my primary game-making engine is Unity, and why, despite my experience in Unreal, Godot, Gamemaker, etc, I don’t use those tools instead. I have my reasons of course (the game jam build was in Unity, Unreal would be too heavy, Gamemaker too light, Godot probably could work but maybe for a project with slightly lower stakes). But I think the reality is this: I am most familiar with Unity, having the most mastery over it and, in turn, some reliance on the things I know it can do for me. Were I to move over to those other tools, I wouldn't have that mastery, and in the process of learning them, I would encounter just as many issues, and need just as many hacks to overcome their problems, as I do with Unity. At the end of the day, I would be forming the same symmetrical, mutually beneficial if slightly toxic relationship with any other game engine as I have with Unity. And for now, what really keeps me in Unity's grip is simple: Better the devil you know…

And that’s it! In summary at least. I skipped over basically restructuring everything about my InputManager because while huge it was also a bit dull. Each screen’s content also had its quirks to re-implement, like how clicking and dragging on the map view needs to move the in-game camera, or scrolling the mouse wheel needing to zoom in and out, which took some finagling.

This also doesn’t cover some little aesthetic touches I’ve done, like making a little greybox room around the computer screens, doing a custom post-process pass on that camera view, and even implementing a “Sun” that moves in sync with the current game speed. These touches, while small and basic at the moment, are the kinds of things Inoculate needed: Context, a sense of space, the sense that the world is far larger and more real than the abstract map you play the game on. It is still early days, but it’s exciting to have spent a few months with this vision of the game in mind and now, in the course of a couple weeks, have it so totally in.

And for a final tease in prep for the next devlog: as I said previously, what’s displayed on the screens is totally arbitrary, and can be swapped in and out at any time. This allows for a very fun way to implement a framing device for the whole game…